The source and target data types should be the same, and the lengths of the data types in source and target should be equal. Verify that data field types and formats are specified. The source data type length should not be less than the target data type length. Metadata testing includes data type checks, data length checks and index/constraint checks. Verify the null values where Not Null is specified for a column. Verify whether the mapping document provides the corresponding ETL information. Number check: numeric values need to be checked and validated. Field values that violate the defined validation rules represent a quality gap that can impact ETL processing.

To start with, setting up test data for updates and inserts is key to testing incremental ETL. While most of the data completeness and data transformation tests are relevant for incremental ETL testing, a few additional tests also apply. By following the steps outlined above, the tester can regression test key ETLs. Regression testing is also performed to ensure that no new bugs are introduced while fixing earlier ones. One challenge is a change in the data source, or incomplete or corrupt source data.

Data is transformed during the ETL process so that it can be consumed by applications on the target system. The tester is tasked with regression testing the ETL. Come up with the transformed data values, or the expected values, for the test data from the previous step. Compare the results of the transformed test data with the data in the target table.

Example: Write a source query that matches the data in the target table after transformation.
Source query: SELECT fst_name||','||lst_name FROM Customer WHERE updated_dt > sysdate-7
Target query: SELECT full_name FROM Customer_dim WHERE updated_dt > sysdate-7
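As a rough illustration of the source-versus-target comparison in the example above, the following Python sketch runs both sides in an in-memory SQLite database. The join key (`integration_id` matching `cust_id`), the sample rows and the helper name `find_transformation_mismatches` are assumptions made for the illustration, and the `updated_dt` filter is left out for brevity.

```python
import sqlite3

def find_transformation_mismatches(conn):
    """Return source ids whose target full_name differs from the expected value."""
    query = """
        SELECT s.cust_id
        FROM Customer AS s
        LEFT JOIN Customer_dim AS t ON t.integration_id = s.cust_id  -- assumed key mapping
        WHERE t.full_name IS NULL
           OR t.full_name <> s.fst_name || ',' || s.lst_name
        ORDER BY s.cust_id
    """
    return [row[0] for row in conn.execute(query)]

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Customer (cust_id INTEGER, fst_name TEXT, lst_name TEXT);
    CREATE TABLE Customer_dim (integration_id INTEGER, full_name TEXT);
    INSERT INTO Customer VALUES (1, 'Ada', 'Lovelace'), (2, 'Alan', 'Turing');
    -- Row 2 was loaded with a space instead of a comma, so it should be flagged.
    INSERT INTO Customer_dim VALUES (1, 'Ada,Lovelace'), (2, 'Alan Turing');
""")

mismatches = find_transformation_mismatches(conn)
print(mismatches)
```

Joining on the key reports which records are wrong, which a plain comparison of two result sets does not. Source rows with a NULL name part would need separate handling, because the concatenation then yields NULL.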

Ensure that all expected data is loaded into the target table. ETL Validator comes with a Baseline & Compare Wizard and a Data Rules test plan for automatically capturing and comparing table metadata. ETL Validator also comes with a Metadata Compare Wizard for automatically capturing and comparing table metadata.

For a data migration project, data is extracted from a legacy application and loaded into a new application. However, performing 100% data validation is a challenge when large volumes of data are involved. When the data volumes in the target table were low, it performed well, but when the data volumes increased, the update slowed down the incremental ETL tremendously.

ETL Testing is the process designed to verify and validate the ETL process in order to reduce data redundancy and information loss. Verify that the changed data values in the source are reflected correctly in the target data. Compare data in the target table with the data in the baselined table to identify differences.
Bug types include Boundary Value Analysis (BVA) related bugs and Equivalence Class Partitioning (ECP) related bugs. Checks include the following: verify whether data is moved as expected; check whether the data follows the rules and standards defined in the data model; verify whether counts in the source and target match; verify that there are no orphan records and that foreign-primary key relations are maintained; verify that the foreign-primary key relations are preserved during the ETL; verify that there are no redundant tables and that the database is optimally normalized; verify whether data is missing in columns where it is required. Transform data to DW (Data Warehouse) format. Build keys: a key is one or more data attributes that uniquely identify an entity.

The source and target databases, mappings, sessions and the system may have performance bottlenecks. Validate reference data between spreadsheet and database or across environments. ETL Testing comes into play when the whole ETL process needs to be validated and verified in order to prevent data loss and data redundancy. Compare the count of records of the primary source table and the target table. Table balancing or production reconciliation: this type of ETL testing is done on data as it is being moved into production systems. Validate the source and target table structure against the corresponding mapping document.

The general methodology of ETL testing is to use SQL scripting or to eyeball the data. These approaches are time-consuming, error-prone and seldom provide complete test coverage. Some of the parameters to consider when choosing an ETL testing tool are given below. The purpose of Data Quality tests is to verify the accuracy of the data. The purpose of Incremental ETL testing is to verify that updates on the sources are loaded into the target system properly.
Analysts must ensure that they have captured all the relevant screenshots and documented the steps to reproduce the test cases, along with the actual versus expected results for each test case. While performing ETL testing, two documents will always be used by an ETL tester. ETL testing verifies whether the transformed data is as expected. The key responsibilities of an ETL tester are segregated into three categories. Compare your output with the data in the target table. Benchmarking capability allows the user to automatically compare the latest data in the target table with a previous copy to identify the differences.

To validate the complete data set in the source and target tables, a minus query is the best solution. Run both source minus target and target minus source. If a minus query returns any rows, those should be considered mismatching rows. Match rows between source and target using an intersect statement. The count returned by intersect should match the individual counts of the source and target tables.

Validate the source and target table structure against the mapping document. Then validate the documents against the business requirements to ensure that they align with business needs. Data quality testing includes number check, date check, precision check, data check, null check, etc. Data quality testing is done to avoid errors due to dates or order numbers during business processes. Data model standards dictate that the values in certain columns should adhere to the values in a domain.

Black-box testing is a method of software testing that examines the functionality of an application without peering into its internal structures or workings. Automate ETL regression testing using ETL Validator: ETL Validator comes with a Baseline and Compare Wizard which can be used to generate test cases for automatically baselining your target table data and comparing them with the new data. One of the best tools used for performance testing and tuning is Informatica.

Vishal Agrawal on Data Integration, ETL, ETL Testing

The next step involves executing the created test cases in the QA (Quality Assurance) environment to identify the types of bugs or defects encountered during testing. Data loss can occur during migration, which makes it hard to perform source-to-target reconciliation. Prepare test data in the source systems to reflect different transformation scenarios. If an ETL process does a full refresh of the dimension tables while the fact table is not refreshed, the surrogate foreign keys in the fact table are no longer valid.
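The minus and intersect checks described above can be sketched in Python against an in-memory SQLite database. SQLite spells the operator `EXCEPT` where Oracle uses `MINUS`; the table names `src` and `tgt`, the sample rows and the helper `reconcile` are hypothetical.

```python
import sqlite3

def reconcile(conn, source, target):
    """Compare two tables row by row. Table names must be trusted identifiers."""
    def rows(sql):
        return conn.execute(sql).fetchall()
    return {
        "source_minus_target": rows(f"SELECT * FROM {source} EXCEPT SELECT * FROM {target}"),
        "target_minus_source": rows(f"SELECT * FROM {target} EXCEPT SELECT * FROM {source}"),
        "matched": rows(
            f"SELECT COUNT(*) FROM (SELECT * FROM {source} INTERSECT SELECT * FROM {target})"
        )[0][0],
        "source_count": rows(f"SELECT COUNT(*) FROM {source}")[0][0],
        "target_count": rows(f"SELECT COUNT(*) FROM {target}")[0][0],
    }

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE src (id INTEGER, val TEXT);
    CREATE TABLE tgt (id INTEGER, val TEXT);
    INSERT INTO src VALUES (1, 'a'), (2, 'b'), (3, 'c');
    INSERT INTO tgt VALUES (1, 'a'), (2, 'x'), (3, 'c');
""")

report = reconcile(conn, "src", "tgt")
# The load is clean only if both differences are empty and the intersect
# count equals both table counts.
is_clean = (not report["source_minus_target"] and not report["target_minus_source"]
            and report["matched"] == report["source_count"] == report["target_count"])
print(report, is_clean)
```

Note that `EXCEPT` and `INTERSECT` operate on distinct rows, so a separate duplicate check is still needed when the tables can contain repeated rows.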
For example, consider a retail store which has different departments such as sales, marketing and logistics. This article explained the process of ETL testing, its types, and some of its challenges. Although ETL Testing is a very important process, companies can face some challenges when trying to deploy it in their applications.

The objective of ETL testing is to assure that the data that has been loaded from a source to a destination after business transformation is accurate. The main purpose of data warehouse testing is to ensure that the integrated data inside the data warehouse is reliable enough for a company to make decisions on. In addition, this system creates metadata that is used to diagnose source system problems and to improve data quality. The purpose of Metadata Testing is to verify that the table definitions conform to the data model and application design specifications. A change log should be maintained in every mapping document.

Often testers need to regression test an existing ETL mapping with a number of transformations. The advantage of this approach is that the transformation logic does not need to be reimplemented during the testing. Using this approach, any changes to the target data can be identified. These differences can then be compared with the source data changes for validation. View or process the data in the target system. Analysts must try to reproduce the defects and log them with proper comments and screenshots.

The latest record is tagged with a flag, and there are start date and end date columns to indicate the period of relevance for the record.
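One check that follows from the flag and date-range design described above is that every business key has exactly one record flagged as current. The sketch below is only an illustration: the table `customer_dim`, its columns and the `'Y'`/`'N'` flag values are hypothetical.

```python
import sqlite3

def keys_without_single_current_row(conn):
    """Return (cust_id, current_rows) for keys that do not have exactly one current record."""
    query = """
        SELECT cust_id,
               SUM(CASE WHEN current_flag = 'Y' THEN 1 ELSE 0 END) AS current_rows
        FROM customer_dim
        GROUP BY cust_id
        HAVING SUM(CASE WHEN current_flag = 'Y' THEN 1 ELSE 0 END) <> 1
        ORDER BY cust_id
    """
    return conn.execute(query).fetchall()

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customer_dim (
        cust_id INTEGER, city TEXT, start_date TEXT, end_date TEXT, current_flag TEXT
    );
    INSERT INTO customer_dim VALUES
        (1, 'Pune',   '2020-01-01', '2021-06-30', 'N'),
        (1, 'Mumbai', '2021-07-01', NULL,         'Y'),  -- valid history for key 1
        (2, 'Delhi',  '2020-01-01', NULL,         'Y'),
        (2, 'Agra',   '2022-03-01', NULL,         'Y'),  -- two current rows for key 2
        (3, 'Goa',    '2019-01-01', '2020-01-01', 'N');  -- no current row for key 3
""")

violations = keys_without_single_current_row(conn)
print(violations)
```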
Set up test data for performance testing either by generating sample data or by making a copy of the production (scrubbed) data. This could be necessary because the project has just started and the source system only has a small amount of test data, or because production data contains PII which cannot be loaded into the test database without scrubbing. Data profiling is used to identify data quality issues, and the ETL is designed to fix or handle these issues. Automating ETL testing can also eliminate human errors made while performing manual checks.

Responsibilities and best practices include the following. Identify the problem and provide solutions for potential issues. Approve requirements and design specifications. Write SQL queries for various scenarios such as count tests. Projected data should be loaded into the data warehouse without any data loss or truncation. Ensure that the ETL application appropriately rejects invalid data, replaces it with default values and reports it. Ensure that the data is loaded into the data warehouse within the prescribed and expected time frames to confirm scalability and performance. All methods should have appropriate unit tests regardless of visibility. To measure their effectiveness, all unit tests should use appropriate coverage techniques.

Overall, testing plays an important part in governing the ETL process, and every type of company should incorporate it in its business. In this type of testing, SQL queries are run to validate business transformations and to check whether data is loaded into the target destination with the correct transformations. Verify that the table was named according to the table naming convention. The goal of these checks is to identify orphan records in the child entity with a foreign key to the parent entity.
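The orphan record check mentioned above can be sketched as a left join from the child table to the parent table. The `customer` and `orders` tables and their columns are hypothetical; the second helper counts child rows whose foreign key is null, which is usually reported separately.

```python
import sqlite3

def find_orphans(conn):
    """Child rows whose foreign key points at a parent row that does not exist."""
    query = """
        SELECT c.order_id, c.cust_id
        FROM orders AS c
        LEFT JOIN customer AS p ON p.cust_id = c.cust_id
        WHERE c.cust_id IS NOT NULL AND p.cust_id IS NULL
        ORDER BY c.order_id
    """
    return conn.execute(query).fetchall()

def count_null_foreign_keys(conn):
    """Number of child rows with no foreign key value at all."""
    return conn.execute("SELECT COUNT(*) FROM orders WHERE cust_id IS NULL").fetchone()[0]

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customer (cust_id INTEGER PRIMARY KEY);
    CREATE TABLE orders (order_id INTEGER PRIMARY KEY, cust_id INTEGER);
    INSERT INTO customer VALUES (1), (2);
    INSERT INTO orders VALUES (10, 1), (11, 3), (12, NULL);
""")

orphans = find_orphans(conn)
null_fk_count = count_null_foreign_keys(conn)
print(orphans, null_fk_count)
```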
There are several challenges in ETL testing, such as a failure to understand business requirements, or employees who are unclear about the business needs. It may not be practical to perform end-to-end transformation testing in such cases, given the time and resource constraints. Often, changes to source and target system metadata are not communicated to the QA and development teams, resulting in ETL and application failures. Track changes to table metadata over a period of time.

This type of testing confirms that all the source data has been loaded into the target data warehouse correctly, and it also checks threshold values. Compare record counts between source and target. Here, you need to check whether there is any duplicate data present in the target system. Look for duplicate rows with the same unique key column, or a unique combination of columns, as per the business requirement. Verify whether the mapping document provides the corresponding ETL information. Performance testing in ETL is a testing technique to ensure that an ETL system can handle the load of multiple users and transactions.

With the introduction of cloud technologies, many organizations are trying to migrate their data from legacy source systems to cloud environments by using ETL tools. ETL testing is a concept which can be applied to different tools and databases in the information management industry. In a data integration project, data is shared between two different applications, usually on a regular basis. ETL stands for Extract, Transform and Load. It is the process of integrating data from multiple sources, transforming it into a common format, and delivering the data into a destination, usually a data warehouse, for gathering valuable business insights.
ETL Validator comes with a Data Rules Test Plan and a Foreign Key Test Plan for automating data quality testing. Type 2 SCD is designed to create a new record whenever there is a change to a set of columns. Business Intelligence is the process of collecting raw data or business data and turning it into information that is useful and more meaningful.

Example: The naming standard for fact tables is to end with _F, but some of the fact table names end with _FACT.
Example: The data model column data type is NUMBER, but the database column data type is STRING (or VARCHAR).

Data quality checks cover invalid characters, patterns, precisions, nulls and numbers from the source, and the invalid data is reported. Performance of the ETL process is one of the key issues in any ETL project. Verify that the counts in the source and target match. Some of the tests that can be run are: compare and validate counts, aggregates (min, max, sum, avg) and actual data between the source and target. Identify the problem and offer solutions for potential issues.
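The two metadata examples above (a fact table that ends with _FACT instead of _F, and a NUMBER column created as VARCHAR) can be detected with a small script. This is a minimal sketch using SQLite's `PRAGMA table_info`; the table `sales_fact`, the expected-type dictionary and both helper names are assumptions, and other databases expose the same information through their own catalog views.

```python
import sqlite3

def column_type_mismatches(conn, table, expected_types):
    """Map column -> (expected, actual) where the declared type differs from the data model."""
    actual = {row[1].lower(): row[2].upper()
              for row in conn.execute(f"PRAGMA table_info({table})")}
    return {col: (exp.upper(), actual.get(col.lower()))
            for col, exp in expected_types.items()
            if actual.get(col.lower()) != exp.upper()}

def naming_violations(table_names, suffix="_F"):
    """Fact table names that do not end with the agreed suffix."""
    return [name for name in table_names if not name.upper().endswith(suffix)]

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales_fact (order_id NUMBER, amount VARCHAR(20))")

# Hypothetical data model: both columns are specified as NUMBER.
type_issues = column_type_mismatches(
    conn, "sales_fact", {"order_id": "NUMBER", "amount": "NUMBER"})
name_issues = naming_violations(["SALES_FACT", "ORDERS_F"])
print(type_issues, name_issues)
```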

Baseline reference data and compare it with the latest reference data so that the changes can be validated. Due to changes in requirements by the customer, a tester might need to re-create or modify mapping documents and SQL scripts, which slows the process down. Data transformation testing is done because in many cases it cannot be achieved by writing a single source query. Verify that the names of database metadata such as tables, columns and indexes follow the naming standards. The metadata testing is conducted to check the data type, data length, and index.

The device is not responding to the application. Example: In a financial company, the interest earned on a savings account depends on the daily balance in the account for the month.

Business Intelligence is defined as the process of collating business data or raw data and converting it into information that is deemed more valuable and meaningful. Writing SQL queries for count-test-like scenarios is one of the tester's tasks. Verify that there are no orphan records and that foreign-primary key relations are maintained. ETL Validator comes with a Component Test Case that supports comparing an OBIEE report (logical query) with the database queries from the source and target. ETL can transform dissimilar data sets into a unified structure. BI tools can later be used to derive meaningful insights and reports from this data. ETL Testing is different from application testing because it requires a data-centric testing approach.
ETL stands for Extract-Transform-Load, and it is the process by which data is loaded from the source system into the data warehouse. ETL Testing involves comparing large volumes of data, typically millions of records. This type of ETL test can be generated automatically, saving substantial test development time. In regulated industries such as finance and pharmaceuticals, 100% data validation might be a compliance requirement. Like other testing processes, ETL testing also goes through different phases.

Review the requirements document to understand the transformation requirements. When a source record is updated, the incremental ETL should be able to look up the existing record in the target table and update it. Estimate the expected data volumes in each of the source tables for the ETL for the next 1-3 years. Conforming means resolving the conflicts between data that are incompatible, so that they can be used in an enterprise data warehouse. Verify that the table and column data type definitions are as per the data model design specifications.

Example: Let's consider a data warehouse scenario for Case Management analytics using OBIEE as the BI tool.
Example: In the data warehouse scenario, ETL changes are pushed on a periodic basis (e.g. monthly).

Example: The business requirement says that a combination of First Name, Last Name, Middle Name and Date of Birth should be unique.
Sample query to identify duplicates: SELECT fst_name, lst_name, mid_name, date_of_birth, count(1) FROM Customer GROUP BY fst_name, lst_name, mid_name, date_of_birth HAVING count(1)>1
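The duplicate check in the example above can be tried out as follows. This is a small sketch on an in-memory SQLite database with made-up rows; note that every non-aggregated column in the SELECT list, including `date_of_birth`, appears in the GROUP BY clause.

```python
import sqlite3

DUPLICATE_QUERY = """
    SELECT fst_name, lst_name, mid_name, date_of_birth, COUNT(*) AS occurrences
    FROM Customer
    GROUP BY fst_name, lst_name, mid_name, date_of_birth
    HAVING COUNT(*) > 1
"""

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE Customer (fst_name TEXT, lst_name TEXT, mid_name TEXT, date_of_birth TEXT);
    INSERT INTO Customer VALUES
        ('Ada',  'Lovelace', 'K', '1815-12-10'),
        ('Ada',  'Lovelace', 'K', '1815-12-10'),  -- same combination loaded twice
        ('Alan', 'Turing',   'M', '1912-06-23');
""")

duplicates = conn.execute(DUPLICATE_QUERY).fetchall()
print(duplicates)
```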
Data validation testing is used to verify data authenticity and completeness with the help of validation counts and periodic spot checks between target and real-time data. This testing is done to ensure that the data is accurately loaded and transformed as expected. Data completeness testing is done to verify that all the expected data is loaded into the target from the source. The correct values are accepted and the rest are rejected.

Verify that proper constraints and indexes are defined on the database tables as per the design specifications. Validate that the unique key, the primary key and any other columns that should be unique as per the business requirements have no duplicate rows. Count the records with null foreign key values in the child table. Typically, the records updated by an ETL process are stamped with a run ID or the date of the ETL run.

Review the source-to-target mapping design document to understand the transformation design. The target table is loaded from the stage file or table after applying a transformation. Apply transformations on the data using SQL or a procedural language such as PL/SQL to reflect the ETL transformation logic. Compare the transformed data in the target table with the expected values for the test data.

Example 2: Compare the number of customers by country between the source and target.

An executive report shows the number of Cases by Case type in OBIEE. However, during testing, when the number of cases was compared between the source, the target (data warehouse) and the OBIEE report, each of them showed a different value.
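The count comparison in Example 2 above might look like the following sketch, which groups customers by country on each side and reports the countries whose counts differ. The table names `src_customer` and `tgt_customer`, the `country` column and the sample rows are hypothetical.

```python
import sqlite3

def count_by_country(conn, table):
    """Return {country: row count} for a trusted table name."""
    return dict(conn.execute(f"SELECT country, COUNT(*) FROM {table} GROUP BY country"))

def count_differences(source_counts, target_counts):
    """Return {country: (source count, target count)} for countries that disagree."""
    countries = set(source_counts) | set(target_counts)
    return {c: (source_counts.get(c, 0), target_counts.get(c, 0))
            for c in countries
            if source_counts.get(c, 0) != target_counts.get(c, 0)}

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE src_customer (cust_id INTEGER, country TEXT);
    CREATE TABLE tgt_customer (cust_id INTEGER, country TEXT);
    INSERT INTO src_customer VALUES (1, 'US'), (2, 'US'), (3, 'IN');
    INSERT INTO tgt_customer VALUES (1, 'US'), (3, 'IN');  -- customer 2 was not loaded
""")

differences = count_differences(count_by_country(conn, "src_customer"),
                                count_by_country(conn, "tgt_customer"))
print(differences)
```

Matching counts do not prove that the rows themselves match, so this check is typically combined with a row-level comparison.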

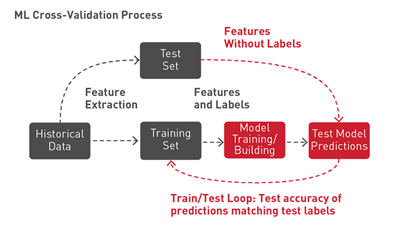

Transformed data is generally important for the target systems, and hence it is important to test transformations. Some of the tests that can be run are to compare and validate counts, aggregates and actual data between the source and target for columns with simple or no transformation. Define data rules to verify that the data conforms to the domain values. Incremental ETL only loads the data that changed in the source system, using some kind of change capture mechanism to identify changes.

Today, companies gather data from multiple sources for analysis. ETL testing is conducted to identify and mitigate issues in data collection, transformation and storage. Metadata Testing involves matching schema, data types, length, indexes, constraints, etc. between source and target systems. Organizations may have legacy data sources such as RDBMS, DW (Data Warehouse), etc. This data will be used for reporting, analysis, data mining, data quality and interpretation, and predictive analysis.

The ETL Testing process can be broken down into 8 broad steps that you can refer to while performing testing. The first and foremost step in ETL Testing is to know and capture the business requirement by designing the data models, business flows, schematic lane diagrams, and reports. After all the defects are logged in a defect management system (usually JIRA), they are assigned to particular stakeholders for fixing.

In this type of ETL Testing, checks are performed on data quality. ETL testing is a data-centric testing process to validate that the data has been transformed and loaded into the target as expected. Here, you need to make sure that the count of records loaded into the target matches the expected count.

Source query: SELECT cust_id, fst_name, lst_name, fst_name||','||lst_name, DOB FROM Customer
Target query: SELECT integration_id, first_name, Last_name, full_name, date_of_birth FROM Customer_dim
Check that data is not truncated in the columns of the target tables. Compare unique values of key fields between the data loaded into the warehouse and the source data. Look for data that is misspelled or inaccurately recorded. Number check: numeric values need to be checked and validated. Date check: dates have to follow the date format, and the format should be the same across all records. Validate that the unique key, the primary key and any other columns that should be unique as per the business requirements have no duplicate rows. Check whether duplicate values exist in any column that is built by combining multiple source columns into one. As per the client requirements, ensure that there are no duplicates in combinations of multiple columns within the target. Identify active records from the ETL development perspective. Identify active records from the business requirements perspective.
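The date check in the list above can be illustrated with a few lines of Python. The sketch assumes, purely for the example, that the agreed format is `YYYY-MM-DD`; the helper name `invalid_dates` and the sample values are made up.

```python
import re
from datetime import datetime

# Shape check for the assumed YYYY-MM-DD format; strptime alone would also
# accept unpadded values such as '2024-1-5'.
DATE_PATTERN = re.compile(r"\d{4}-\d{2}-\d{2}")

def invalid_dates(values, fmt="%Y-%m-%d"):
    """Return the values that do not follow the format or are not real calendar dates."""
    bad = []
    for value in values:
        if not DATE_PATTERN.fullmatch(value):
            bad.append(value)
            continue
        try:
            datetime.strptime(value, fmt)
        except ValueError:
            bad.append(value)
    return bad

loaded_dates = ["2024-01-31", "31/01/2024", "2024-02-30"]
bad_dates = invalid_dates(loaded_dates)
print(bad_dates)
```

The second value uses a different format and the third is not a real date, so both are reported.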