After providing the administrator username and password, we can create our cluster by clicking the Create cluster button in the bottom-right corner.

Schemas organize database objects into logical groups, like directories in an operating system.

Click this button to start specifying the configuration with which the cluster will be built. After selecting the region of your choice, the next step is to navigate to the AWS Redshift home page. Consider exploring this page to check out more details regarding your cluster. If you're unclear what type of commands your users are running, in intermix.io you can select your users and groups and filter for the different SQL statements and operations.

The raw schema is your staging area and contains your raw data.

It provides in-depth knowledge about the concepts behind every step to help you understand and implement them efficiently.

With the free tier type, you get one dc2.large Redshift node with SSD storage and the compute power of 2 vCPUs. I write about the process of finding the right WLM configuration in more detail in 4 Simple Steps To Set-up Your WLM in Amazon Redshift For Better Workload Scalability and our experience with Auto WLM. Assign the transform user group to this queue. If you misconfigure your database, you will run into bottlenecks. Hevo Data, a No-code Data Pipeline, helps you transfer data from a source of your choice in a fully automated and secure manner without having to write the code repeatedly.

The default region in AWS is N. Virginia, which you can see in the top-right corner.

But first-time users who are just getting started with Redshift often do not need such high-capacity nodes, which can incur a lot of cost due to the capacity associated with them.

After loading sample data, it will ask for the administrator username and password to authenticate with AWS Redshift securely. When you click on the Run button, it will create a table named Persons with the attributes specified in the query.

Groups also help you define more granular access rights on a group level. It is a managed service by AWS, so you can easily set this up in a short time with just a few clicks.

Applications for your ETL, workflows, and dashboards. AWS offers four different node types for Redshift. The result is that you're now in control. To run an analysis using Redshift, select the cluster you want and click on query data to create a new query. Databases in the same cluster do affect each other's performance. If you don't choose a parameter group, Amazon Redshift will allocate a default parameter group to your Redshift cluster.

Having filled in all the requisite details, you can click on the Restore button to restore the desired table.

AWS Redshift is a columnar data warehouse service on AWS cloud that can scale to petabytes of storage, and the infrastructure for hosting this warehouse is fully managed by AWS cloud.

By adding intermix.io to your set-up, you get a granular view into everything that touches your data, and what's causing contention and bottlenecks. Click on the Delete button to start the deletion process; within a minute or two, the AWS Redshift cluster will be deleted. The parameter group will contain the settings that will be used to configure the database. With more granular groups, you can also define more granular access rights. You can even copy the snapshots to another AWS region for resilience. Amazon Redshift supports identity-based policies (IAM policies).

Knowing the cluster as a whole is healthy allows you to drill down and focus performance-tuning at the query level.

With the three additional queues next to default, you can now route your user groups to the corresponding queue, by assigning them to a queue in the WLM console.
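The routing idea can be sketched as a simple lookup. This is only an illustration of the concept, not how Redshift implements WLM: the group names follow the load, transform, and ad-hoc user groups discussed in this article, while the queue names and the `route_to_queue` helper are hypothetical.

```python
# Hypothetical mapping of user groups to WLM queues (all names are illustrative).
GROUP_TO_QUEUE = {
    "load": "load_queue",
    "transform": "transform_queue",
    "ad_hoc": "ad_hoc_queue",
}

DEFAULT_QUEUE = "default"


def route_to_queue(user_groups):
    """Return the queue of the first recognized group, else the default queue."""
    for group in user_groups:
        if group in GROUP_TO_QUEUE:
            return GROUP_TO_QUEUE[group]
    # Users without a recognized group fall through to the default queue.
    return DEFAULT_QUEUE


if __name__ == "__main__":
    print(route_to_queue(["transform"]))
    print(route_to_queue(["finance"]))
```

Any user whose groups are not assigned to one of the three additional queues ends up in the default queue, which is why keeping the group assignments current matters.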

Popular business intelligence products include Looker, Mode Analytics, Periscope Data, and Tableau. Popular schedulers include Luigi, Pinball, and Apache Airflow. Each node can take up to 128 TB of data to process. Once you have that down, you'll see that Redshift is extremely powerful. MPP is deemed good for analytical workloads since they require sophisticated queries to function effectively. Finally, we have seen how to easily create a Redshift cluster using the AWS CLI. Also in this category are various SQL clients and tools data scientists use, like DataGrip or Python Notebooks. By far the most popular option is Airflow.

When using IAM, the URL (cluster connection string) for your cluster will look like this: jdbc:redshift:iam://cluster-name:region/dbname.
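As a small sketch, the connection string format above can be assembled from its three parts. The helper name `build_iam_jdbc_url` and the example values are assumptions made for illustration; only the URL pattern itself comes from the text.

```python
def build_iam_jdbc_url(cluster_name: str, region: str, db_name: str) -> str:
    """Assemble an IAM-style JDBC URL in the format quoted in this article."""
    parts = (("cluster_name", cluster_name), ("region", region), ("db_name", db_name))
    for label, value in parts:
        if not value:
            raise ValueError(f"{label} must not be empty")
    return f"jdbc:redshift:iam://{cluster_name}:{region}/{db_name}"


if __name__ == "__main__":
    # Hypothetical cluster name and region; "dev" is the default database name.
    print(build_iam_jdbc_url("my-cluster", "us-east-1", "dev"))
    # prints jdbc:redshift:iam://my-cluster:us-east-1/dev
```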

With the free tier option, AWS automatically uploads some sample data to your Redshift cluster to help you learn about AWS Redshift. Next, we need to select the Cluster identifier, Database name, and Database user. Type Redshift in the search console as shown below, and you will find the service name listed.

With WLM query monitoring rules, you can ensure that expensive queries caused by, e.g., poor SQL statements don't consume excessive resources.

This will provide you with the details about all the clusters created on your AWS account. Ad-hoc queries are high memory and unpredictable. Only data engineers in charge of building pipelines should have access to this area. finance, analysts, or sales. A group of users with identical privileges. We briefly looked at how to access the cluster from the browser and run SQL queries against it.

dev is the default database assigned by AWS when launching a cluster. There are three additional WLM settings we recommend using.

By using IAM, you can control who is active in the cluster, manage your user base, and enforce permissions. You can view the newly created table and its attributes here. So here, we have seen how to create a Redshift cluster and run queries using it in a simple way. This will take you to the Redshift console.

You're also protecting your workloads from each other.

After providing the unique cluster identifier, you will be asked to choose between the production and free tier options. Using both SQA and Concurrency Scaling will lead to faster query execution within your cluster. Easily load data from a source of your choice to Redshift without having to write any code using Hevo.

You can read more about the AWS Redshift query language from here. We can use databases to store and manage structured datasets, but that is not enough for analysis and decision-making. Assign the ad-hoc user group to this queue. So let's start with a few key concepts and terminology you need to understand when using Amazon Redshift.

If you wish to create your Redshift cluster in a different region, you can select the region of your choice.

You're loading a large chunk of test data into dev, then running an expensive set of queries against it.

a new hire. It's where you load and extract data from. It can be modified even after the cluster is created, so we will not configure it for now. First-time users who intend to open a new AWS account can read this article, which explains the process of opening and activating a new AWS account. For example, you have dev and prod.

Now let's look at how to orchestrate all these pieces. Now, we will see how to use the AWS command-line interface to configure a Redshift cluster. You can either pause or terminate a cluster when it is not required, depending on your use case. Once you are on the home page of AWS Redshift, you will find several icons on the left pane, which offer options to operate on various features of Redshift. Data apps are a particular type of user.

They are configuration problems, which any cloud warehouse will encounter without careful planning. Scheduled jobs that run workflows to transform your raw data.

No users are assigned apart from select admin users.

If the connection is established successfully, you can view the connected status at the top in the query data section. Navigate to the dashboard page by clicking on the dashboard icon on the left pane.

At first, using the default configuration will work, and you can scale by adding more nodes. Beyond control and the ability to fine-tune, the proposed set-up delivers major performance and cost benefits. What is true about Redshift is that it has a fair number of knobs and options to configure your cluster. Transform queries are high memory and predictable.

To do so, you need to unload/copy the data into a single database.

It's also the approach we use to run our own internal fleet of over ten clusters. We're covering the major best practices in detail in our post Top 14 Performance Tuning Techniques for Amazon Redshift.

Once you are in the cluster creation wizard, you will need to provide different details to determine the configuration of your AWS Redshift cluster. The whole database schema can be seen on the left side in the same section.

Somebody else is now in charge, e.g. High scalability and concurrency, more efficient use of the cluster, and more control. In the next section, we will discuss how to create and configure the Redshift cluster on AWS using the AWS management console and command-line interface. We haven't created any parameter for authentication using the secrets manager, so we will choose temporary credentials.

You will see that similar workloads have similar consumption patterns. And that approach is causing problems now. Upon a complete walkthrough of the content, you will be able to set up Redshift clusters for your instance with ease. If you're in the process of re-doing your Amazon Redshift set-up and want a faster, easier, and more intuitive way, then set up a free trial to see your data in intermix.io.

For example, some of our customers have multi-country operations. A single user with a login and password who can connect to a Redshift cluster. To set up Redshift, you must create the nodes which combine to form a Redshift cluster.

You can set up more schemas depending on your business logic. By adding nodes, a cluster gets more processing power and storage. MPP is flexible enough to incorporate semi-structured and structured data.

The person who configured the original set-up may have left the company a long time ago.

Rahul Mehta is a Software Architect with Capgemini focusing on cloud-enabled solutions. Users that load data into the cluster are also called ETL or ELT. Queries by your analysts and your business intelligence and reporting tools. If you are a new user, it is highly probable that you would be the root/admin user and you would have all the required permissions to operate anything on AWS.

Amazon Redshift Clusters are defined as a pivotal component in the Amazon Redshift Data Warehouse. That may not seem important in the beginning when you have 1-2 users.

After that, click on connect in the bottom-right corner. First, you have to select the connection which will be a new connection if you are going to use the Redshift cluster for the first time.

A picture tells a thousand words, so I've summarized everything into one chart. Usually, the data which needs to be analyzed is placed in the S3 bucket or other databases. The corresponding view resembles a layered cake, and you can double-click your way through the different schemas, with a per-country view of your tables. Of course, you're not limited to two schemas.

Each queue gets a percentage of the cluster's total memory, distributed across slots.
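To make the arithmetic concrete, here is a minimal sketch of how a queue's memory share divides across its slots. The function name and the 100 GB total are hypothetical values chosen for illustration, and the sketch assumes memory is split evenly between the slots of a queue.

```python
def memory_per_slot(total_memory_gb: float, queue_percent: float, slots: int) -> float:
    """Memory available to one slot, assuming an even split within the queue."""
    if not 0 < queue_percent <= 100:
        raise ValueError("queue_percent must be in (0, 100]")
    if slots < 1:
        raise ValueError("slots must be at least 1")
    queue_memory_gb = total_memory_gb * queue_percent / 100
    return queue_memory_gb / slots


if __name__ == "__main__":
    # Hypothetical cluster with 100 GB of memory; one queue gets 40% and has 5 slots.
    print(memory_per_slot(100, 40, 5))  # prints 8.0
```

The same queue with 10 slots would give each query half as much memory, which is the trade-off between concurrency and memory per query.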

That's because nobody ever keeps track of those logins. Queues are the key concept for separating your workloads and efficient resource allocation. The next step is to specify the database configuration.

But it turns out these are not Redshift problems. A frequent situation is that a cluster was set up as an experiment and then that set-up grew over time. The additional configuration allows specifying details like network configuration, security, backup management, parameter and option groups that control the behavior of the Redshift cluster, as well as maintenance windows. Transform users run INSERT, UPDATE, and DELETE transactions.

Muhammad Faraz on Data Integration, Tutorials For the instructions to set up CLI credentials, visit the following article: https://linuxhint.com/configure-aws-cli-credentials/. Each node type comes with a combination of computing resources (CPU, memory, storage, and I/O).

The next detail is Node Type which determines the capacity of nodes in your cluster.

Instead, we cover the things to consider when planning your data architecture.

TICKIT contains individual sample data files: two fact tables and five dimensions. The default value for this setting will be No. With intermix.io, you can track the growth of your data by schema, down to the single table. Redshift supports creating almost all the major database objects like Databases, Tables, Views, and even Stored Procedures. We call that "The Second Set-up": reconfiguring a cluster the right way and ironing out all the kinks. Amazon Redshift is a petabyte-scale Cloud-based Data Warehouse service. You can change this configuration as needed or use the default values. In this article, we will explore how to create your first Redshift cluster on AWS and start operating it.

And then we'll dive into the actual configuration. A data warehouse is similar to a regular SQL database.

The default database name is dev, and the default port on which AWS Redshift listens is 5439.

A cluster can have one or more databases. To avoid additional costs, we will use the free tier type for this demonstration. It's a fast and intuitive way to understand if a user is running operations they SHOULD NOT be running. Using Redshift, you can query data about ten times faster than regular databases.

You can read more about Redshift node types from here. Depending on permissions, end-users can also create their own tables and views in this schema. Once you have a new AWS account, AWS offers many services under free-tier where you receive a certain usage limit of specific services for free.

They have a single login to a cluster, but with many users attached to the app.

Hevo, with its strong integration with 100+ sources & BI tools, allows you to not only export & load data but also transform & enrich your data & make it analysis-ready in a jiff.

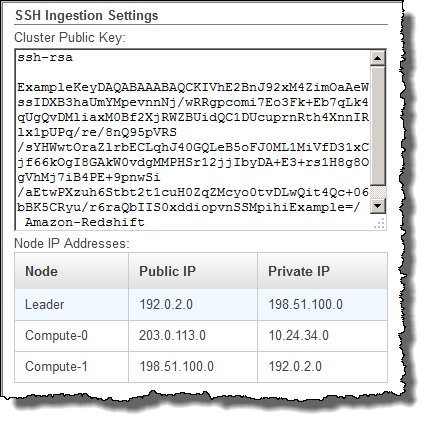

Provide the details as shown below and click on the Connect to database button. Provide a password of your choice as per the rules mentioned below the password box.

It provides a consistent & reliable solution to manage data in real-time and always have analysis-ready data in your desired destination. A schema is the highest level of abstraction for file storage. On that note have you looked at our query recommendations? a user, a group, a role) to manage cluster access for users vs. creating direct logins in your cluster. Click on the Editor icon on the left pane to connect to Redshift and fire queries to interrogate the database or create database objects.

Load users run COPY and UNLOAD statements. Pricing for Redshift is based on the node type and the number of nodes running in your cluster.
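Since pricing depends on node type and node count, a rough estimate is simple multiplication. The sketch below assumes the 160 GB of storage per dc2.large node quoted in this article; the hourly rate is a made-up placeholder, not an actual AWS price, and the function names are hypothetical.

```python
DC2_LARGE_STORAGE_GB = 160  # storage per dc2.large node, as stated in this article


def cluster_storage_gb(nodes: int, storage_per_node_gb: int = DC2_LARGE_STORAGE_GB) -> int:
    """Total storage of a cluster made of identical nodes."""
    return nodes * storage_per_node_gb


def estimated_cost(nodes: int, hourly_rate_per_node: float, hours: float) -> float:
    """Rough on-demand cost: nodes x hourly rate x hours the cluster is running."""
    return nodes * hourly_rate_per_node * hours


if __name__ == "__main__":
    # A 2-node cluster; 0.5 per node-hour is a placeholder rate, not a real price.
    print(cluster_storage_gb(2))         # prints 320
    print(estimated_cost(2, 0.5, 100))   # prints 100.0
```

Check the current AWS pricing page for the real hourly rate of your node type and region before relying on any estimate like this.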

With MPP, you can linearly scale your data to keep up with data growth. The name of the Redshift cluster must be unique within the region and can contain from 1 to 63 characters.
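A quick pre-check of a cluster name can catch mistakes before you reach the console. The 1 to 63 character limit comes from the text above; the remaining rules in this sketch (lowercase letters, digits, and hyphens only, starting with a letter, no trailing or doubled hyphen) are assumptions based on AWS's documented identifier constraints and should be confirmed against the current documentation. Uniqueness within the region cannot be checked locally.

```python
import re

# Starts with a lowercase letter, then up to 62 lowercase letters, digits, or hyphens.
_IDENTIFIER_RE = re.compile(r"[a-z][a-z0-9-]{0,62}")


def is_valid_cluster_identifier(name: str) -> bool:
    """Local sanity check for a cluster identifier (does not check uniqueness)."""
    if _IDENTIFIER_RE.fullmatch(name) is None:
        return False
    # Assumed extra rules: no trailing hyphen and no two consecutive hyphens.
    return not name.endswith("-") and "--" not in name


if __name__ == "__main__":
    print(is_valid_cluster_identifier("my-first-cluster"))  # prints True
    print(is_valid_cluster_identifier("a" * 64))            # prints False
```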

MPP architecture is christened that way because it lets various processors perform multiple operations simultaneously. With historic snapshots, you can restore an entire table or cluster seamlessly in just a few steps.

The transformation steps in-between involve joining different data sets. That may seem daunting at first, but there's a structure and method to use them. The pipeline transforms data from its raw format, coming from an S3 bucket, into an output consumable by your end-users. DC2 stands for Dense Compute, DS2 stands for Dense Storage, and RA3 is the latest and most advanced offering from Redshift, with the most powerful nodes and very large compute and storage capacity. The raw schema is your abstraction layer: you use it to land your data from S3, clean and denormalize it, and enforce data access permissions. In the AWS cloud, almost every service, with a few exceptions, is regional, which means that whatever you create in the AWS cloud is created in the region you selected. Through this article, you will get a deep understanding of the tools and techniques being mentioned, and it will help you hone your skills further on Redshift Clusters. For more detail on how to configure your queues. From there, you can double-click on the schema to understand which table(s) and queries are driving that growth. A superuser has admin rights to a cluster and bypasses all permission checks.

Do not be alarmed by the status: you may wonder why, when you are just creating your cluster, it's showing Modifying instead of a creating, pending, or in-progress status. One of the greatest advantages of data warehouse integration is having a single source of truth.

Little initial thought went into figuring out how to set up the data architecture.

And here, Redshift is no different than other warehouses. Using this, we can read a very large amount of data in a short period and study trends and relationships among it. With the right configuration, combined with Amazon Redshift's low pricing, your cluster will run faster and at a lower cost than any other warehouse out there, including Snowflake and BigQuery.

So, it is a very secure and reliable service which can analyze large sets of data at a fast pace. Once you get used to the command line and gain some experience, you will find it more satisfactory and convenient than the AWS management console. This completes the database level configuration of Redshift.

That's helpful when e.g. Redshift operates in a clustered model with a leader node and multiple worker nodes, like any other clustered or distributed database model in general.

Redshift is a SQL-based database that can run analytics on datasets and supports SQL-type queries. We intend to use the cluster from our personal machine over an open internet connection. The data schema contains tables and views derived from the raw data.

This is generally not the recommended configuration for production scenarios, but for first-time users who are just getting started with Redshift and do not have any sensitive data in the cluster, it's okay to use the Redshift cluster with non-sensitive data over open internet for a very short duration.

In this article, we will discuss Redshift and how it can be created on AWS.

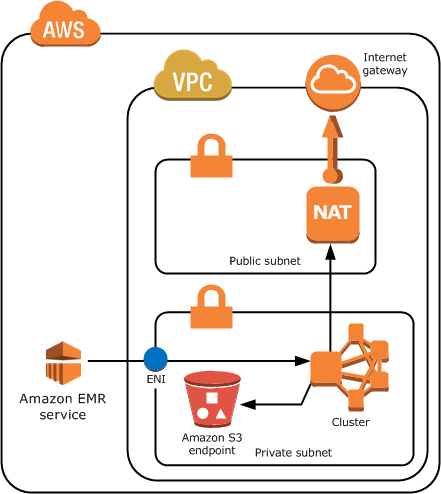

In the additional configurations section, switch off the Use Defaults switch, as we intend to change the accessibility of the cluster. You can learn more about AWS regions from this article. Once you are done using your cluster, it is recommended to terminate the cluster to avoid incurring any cost or wastage of the free-tier usage. Once you successfully log on, you will be taken to a window as shown below. The two integral benefits of leveraging an Amazon VPC are as follows: To create Redshift Clusters in Amazon VPC, you can simply follow the steps given below: This article teaches you how to set up Redshift clusters with ease. But once you're anywhere between 4-8 nodes, you'll notice that an increase in nodes doesn't result in a linear increase in concurrency and performance.

intermix.io is also in this category, as we run the UNLOAD command to access your Redshift system tables. Using IAM is not a must-have for running a cluster.

These methods, however, can be challenging, especially for a beginner, and this is where Hevo saves the day. These queries are using resources that are shared between the cluster's databases, so doing work against one database will detract from resources available to the other and will impact cluster performance.

They use one schema per country.

We can create a single-node cluster, but that would technically not count as a cluster, so we will consider a 2-node cluster. These are the key concepts to understand if you want to configure and run a cluster. The default username is awsuser. It can provide backups in case of any failure for disaster recovery and has high security using encryption, IAM policies, and VPC.

The separation of concerns between raw and derived data is a fundamental concept for running your warehouse.
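One way to express this separation is as schema-level grants per user group. The sketch below only generates SQL text and does not connect to a cluster. The group names and the access plan are assumptions for illustration (load users write to raw, transform users read raw and write to data, ad-hoc users read data); adapt them to your own policy. Identifiers are double-quoted as a precaution, since some names may collide with reserved words.

```python
def quote_ident(name: str) -> str:
    """Double-quote an SQL identifier, escaping embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


# Hypothetical access plan: (schema, user group, schema-level privilege).
ACCESS_PLAN = [
    ("raw", "load_users", "ALL"),
    ("raw", "transform_users", "USAGE"),
    ("data", "transform_users", "ALL"),
    ("data", "ad_hoc_users", "USAGE"),
]


def grant_statements(plan):
    """Build one GRANT ... ON SCHEMA ... TO GROUP statement per plan entry."""
    return [
        f"GRANT {privilege} ON SCHEMA {quote_ident(schema)} TO GROUP {quote_ident(group)};"
        for schema, group, privilege in plan
    ]


if __name__ == "__main__":
    for statement in grant_statements(ACCESS_PLAN):
        print(statement)
```

Schema-level grants control who can see and create objects in a schema; table-level privileges such as SELECT still have to be granted separately.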

At that point, customers experience one common reaction: "Knowing what we know now, how would we set up our Redshift cluster if we had to do it all over again?"

Limit the number of superusers and restrict the queries they run to administrative statements, like CREATE, ALTER, and GRANT.

Data warehouses can store vast quantities of data. By default, an Amazon Redshift cluster comes with one queue and five slots. When spinning up a new cluster, you create a master user, which is a superuser.

You need to configure your cluster for your workloads. New account users get a 2-month Redshift free trial, so if you are a new user, you will not be charged for Redshift usage for 2 months for a specific type of Redshift cluster. After the successful connection, you can simply write your SQL query using the editor provided.

The data objects list the system objects and schemas. when your cluster is about to fill up and run out of space. You need to select Clusters from the Amazon Redshift Console, open the Table Restore tab, and select a date range to locate the snapshot. This service is only limited to operating in a single availability zone, but you can take the snapshots of your Redshift cluster and copy them to other zones.

In this article, we covered the process of creating an AWS Redshift cluster and the various details that are required for creating a cluster.

The moment you allow your analysts to run queries and run reports on tables in the raw schema, you're locked in. Once you've chosen a snapshot, click on the Restore Table button and fill in the details in the Table Restore dialog box. And now they face the task of re-configuring the cluster. December 30th, 2021 So, select the dc2.large node type, which offers 160 GB of storage per node. Amazon VPC provides you Enhanced Routing, which allows you to tightly manage the flow of data between your Amazon Redshift cluster and all of your data sources. He works on various cloud-based technologies like AWS, Azure, and others. This will start creating your cluster, and you will be taken to the clusters window, where you will find your cluster in the Modifying status. For example, a long-running ad-hoc query won't block a short-running load query, as they are running in separate queues. But it will help you stay in control as your company and Redshift usage grows.

Firstly, provide a cluster name of your choice. The default value for the number of nodes is 2, which you can change as required.

You can either set the administrator password by yourself, or it can be auto-generated by clicking on the Auto generate password button. First, you need to configure AWS CLI on your system.
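Once the CLI is configured, the console choices described in this article map onto options of the `aws redshift create-cluster` command. The sketch below only builds the argument list and does not run it. The defaults (dc2.large, 2 nodes, awsuser, dev, port 5439) are the values mentioned in this article, while the helper name and the example identifier are hypothetical. Check the option names against your installed CLI version before use.

```python
def create_cluster_command(
    cluster_identifier: str,
    master_password: str,
    node_type: str = "dc2.large",
    number_of_nodes: int = 2,
    master_username: str = "awsuser",
    db_name: str = "dev",
    port: int = 5439,
) -> list:
    """Build (but do not execute) an `aws redshift create-cluster` argument list."""
    if number_of_nodes < 1:
        raise ValueError("number_of_nodes must be at least 1")
    command = [
        "aws", "redshift", "create-cluster",
        "--cluster-identifier", cluster_identifier,
        "--node-type", node_type,
        "--master-username", master_username,
        "--master-user-password", master_password,
        "--db-name", db_name,
        "--port", str(port),
    ]
    if number_of_nodes == 1:
        command += ["--cluster-type", "single-node"]
    else:
        command += ["--cluster-type", "multi-node", "--number-of-nodes", str(number_of_nodes)]
    return command


if __name__ == "__main__":
    # Read the password from a secure source in practice; this value is a placeholder.
    print(" ".join(create_cluster_command("my-first-cluster", "<password>")))
```

Returning a list rather than a shell string keeps the arguments separate, so the result can be handed to a process runner without shell quoting issues.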

On the right-hand side of the screen, you will find a button named Create Cluster as shown above. When a user runs a query, Redshift routes each query to a queue.